Storage systems should provide fault tolerance in case a disk in a disk shelf fails. NetApp uses RAID-DP technology to provide this fault tolerance. A RAID group consists of several disks that are linked together in a storage system. Although there are different implementations of RAID, Data ONTAP supports only RAID 4 and RAID-DP. Data ONTAP classifies each disk as one of four RAID types: data, hot spare, parity, or double-parity. A disk's RAID type is determined by how RAID is using it.

| Disk type | Description |
| --- | --- |
| Data disk | A data disk is part of a RAID group and stores data on behalf of the client. |
| Hot spare disk | A hot spare disk does not hold usable data but is available to be added to a RAID group in an aggregate. Any functioning disk that is not assigned to an aggregate, but is assigned to a system, functions as a hot spare disk. |
| Parity disk | A parity disk stores the row parity information used for data reconstruction within a RAID group. |
| Double-parity disk | A double-parity disk stores diagonal (double) parity information within a RAID group when NetApp RAID-DP is enabled. |

RAID-4:

RAID-4 protects data against a single disk failure. It requires a minimum of three disks to configure (two data disks and one parity disk).

With RAID 4, if a single disk block goes bad, the parity disk in that disk’s RAID group is used to recalculate the data in the failed block, and the block is then remapped to a new location on the disk. If an entire disk fails, the parity disk prevents any data from being lost. When the failed disk is replaced, for example by a hot spare, its contents are automatically recalculated from the parity disk and the remaining data disks. This scheme is referred to as row parity.

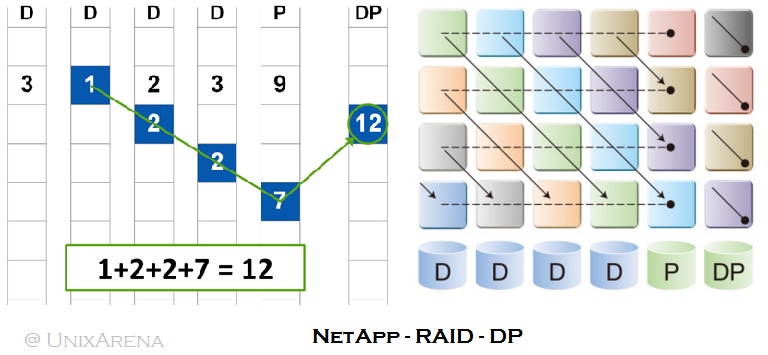
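Row parity is a byte-wise XOR across the data blocks of a stripe, so any single missing block equals the XOR of the parity block and the surviving blocks. The sketch below illustrates only that arithmetic, using tiny in-memory byte strings as stand-ins for disk blocks; the function names are hypothetical and this is not ONTAP code.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def compute_parity(data_blocks):
    """Row parity: the XOR of every data block in the stripe."""
    return xor_blocks(data_blocks)


def reconstruct(surviving_blocks, parity_block):
    """Rebuild the one missing data block from the survivors plus parity."""
    return xor_blocks(list(surviving_blocks) + [parity_block])


if __name__ == "__main__":
    # One stripe across two data disks and one parity disk.
    d0 = b"NetApp!!"
    d1 = b"RAID4-ok"
    parity = compute_parity([d0, d1])

    # Simulate losing the disk that held d1 and rebuilding its block.
    rebuilt = reconstruct([d0], parity)
    print(rebuilt == d1)  # True
```

Because XOR is its own inverse, the same routine rebuilds whichever single block is missing, which is also why one parity disk cannot cover a second simultaneous failure.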

RAID-DP:

RAID-DP technology protects against data loss due to a double-disk failure within a RAID group.

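Each RAID-DP group contains one or more data disks, one parity disk, and one double-parity disk. For example, a five-disk RAID-DP group contains the following: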

- Three data disks

- One parity disk

- One double-parity disk

RAID-DP employs the traditional RAID 4 horizontal row parity. In addition, RAID-DP calculates a diagonal parity stripe and commits it to the disks when the row parity is written.
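RAID-DP is built on NetApp's row-diagonal parity (RDP) construction. The sketch below is a toy model of that idea, not ONTAP's on-disk format: it assumes a prime `P = 5` (four data disks, one row-parity disk, one diagonal-parity disk), four rows per stripe, and one small integer per block. Two details matter: diagonal parity also covers the row-parity disk, and one diagonal is deliberately not stored. With that layout, any two failed disks can be rebuilt by repeatedly solving a row or diagonal that has exactly one unknown block. All names here are hypothetical.

```python
import random
from functools import reduce
from operator import xor

# Toy RDP stripe; P must be prime.
# Disks 0..P-2 hold data, disk P-1 holds row parity, disk P holds diagonal parity.
P = 5
ROWS = P - 1
ROW_PARITY = P - 1
DIAG_PARITY = P


def diagonal_cells(i):
    """(row, disk) cells on diagonal i, across data and row-parity disks."""
    return [(r, d) for r in range(ROWS) for d in range(P) if (r + d) % P == i]


def parity_equations():
    """Each equation lists cells whose XOR must be zero."""
    eqs = [[(r, d) for d in range(P)] for r in range(ROWS)]  # row parity
    # Diagonal P-1 is not stored; diagonal i's parity lives at row i of disk P.
    eqs += [diagonal_cells(i) + [(i, DIAG_PARITY)] for i in range(P - 1)]
    return eqs


def encode(data):
    """data: ROWS lists of P-1 ints. Returns ROWS lists of P+1 ints."""
    stripe = [list(row) + [0, 0] for row in data]
    for r in range(ROWS):
        stripe[r][ROW_PARITY] = reduce(xor, data[r], 0)
    # Diagonal parity includes the row-parity disk, so compute it second.
    for i in range(P - 1):
        stripe[i][DIAG_PARITY] = reduce(
            xor, (stripe[r][d] for r, d in diagonal_cells(i)), 0)
    return stripe


def recover(stripe, failed_disks):
    """Rebuild up to two failed disks by solving single-unknown equations."""
    grid = [[None if d in failed_disks else v for d, v in enumerate(row)]
            for row in stripe]
    eqs = parity_equations()
    progress = True
    while progress:
        progress = False
        for cells in eqs:
            unknown = [(r, d) for r, d in cells if grid[r][d] is None]
            if len(unknown) == 1:
                r, d = unknown[0]
                grid[r][d] = reduce(
                    xor, (grid[a][b] for a, b in cells if (a, b) != (r, d)), 0)
                progress = True
    if any(v is None for row in grid for v in row):
        raise ValueError("stripe is not recoverable")
    return grid


if __name__ == "__main__":
    random.seed(0)
    data = [[random.randrange(256) for _ in range(P - 1)] for _ in range(ROWS)]
    stripe = encode(data)
    print(recover(stripe, {1, 3}) == stripe)  # True: two data disks rebuilt
```

Row parity alone would leave two unknowns in every row after a double failure; the diagonals supply the extra equations that let the reconstruction chain start and proceed.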

RAID GROUP MAXIMUMS:

The RAID group maximums for NetApp storage systems are summarized below. RAID groups can include anywhere from 3 to 28 disks, depending on the platform and RAID type. For best performance and reliability, NetApp recommends using the default RAID group size.

NetApp RAID Maximums
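The sketch below works through the group-size arithmetic: given a disk count and a maximum RAID group size (the `Max RAID Size: 16` value shown in the aggregate output later in this article), it splits the disks into RAID groups and counts the parity disks each group costs. It assumes disks fill each group up to the maximum in order, with leftovers forming a final smaller group; ONTAP's actual placement rules may differ, and `plan_raid_groups` is a hypothetical helper.

```python
def plan_raid_groups(disk_count, raid_size, parity_per_group=2):
    """Split disk_count disks into RAID groups of at most raid_size disks.

    Returns one (data_disks, parity_disks) tuple per group.
    parity_per_group is 2 for RAID-DP and 1 for RAID-4.
    """
    if raid_size <= parity_per_group:
        raise ValueError("raid_size must leave room for at least one data disk")
    groups = []
    remaining = disk_count
    while remaining > 0:
        size = min(raid_size, remaining)
        if size <= parity_per_group:
            raise ValueError(f"{size} leftover disk(s) cannot form a RAID group")
        groups.append((size - parity_per_group, parity_per_group))
        remaining -= size
    return groups


if __name__ == "__main__":
    for count in (3, 5, 7, 40):
        groups = plan_raid_groups(count, raid_size=16)
        parity = sum(p for _, p in groups)
        print(count, groups, f"parity overhead {parity / count:.0%}")
```

For the 3-disk root aggregate and the 5- and 7-disk aggregates built later in this article, this yields a single group with 1, 3, and 5 data disks respectively, matching the RAID status output.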

Aggregates:

An aggregate is a virtual layer built on top of RAID groups, and each RAID group is built from a set of physical disks. Aggregates can use RAID-4 or RAID-DP RAID groups. If a controller fails, its aggregates can be taken over by the HA partner. An aggregate can be grown by adding physical disks to it; in the back end, those disks form RAID groups. There are two types of aggregates in NetApp:

- 32-Bit Aggregate

- 64-Bit Aggregate

You can convert a 32-bit aggregate to a 64-bit aggregate at any time without downtime. A 64-bit aggregate supports more than 16 TB of storage, which is the size limit of a 32-bit aggregate.
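The 16 TB ceiling follows from block addressing. Assuming, as is commonly described for WAFL, 32-bit block numbers that each address a 4 KB block, the largest addressable size is:

$$2^{32}\ \text{blocks} \times 4\,\text{KiB} = 2^{32} \times 2^{12}\,\text{B} = 2^{44}\,\text{B} = 16\,\text{TiB}$$

Widening the block number to 64 bits removes this particular limit, which is why 64-bit aggregates can grow beyond 16 TB.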

Let’s look at the storage aggregate commands.

1. Log in to the NetApp cluster's management LIF.

2. List the available storage aggregates on the system.

NetUA::> storage aggregate show

Aggregate Size Available Used% State #Vols Nodes RAID Status

--------- -------- --------- ----- ------- ------ ---------------- ------------

aggr0_01 900MB 43.54MB 95% online 1 NetUA-01 raid_dp,

normal

aggr0_02 900MB 43.54MB 95% online 1 NetUA-02 raid_dp,

normal

2 entries were displayed.

NetUA::>

3. List the provisioned volumes along with their aggregate names. On node NetUA-01, vol0 resides on aggregate “aggr0_01”.

NetUA::> volume show

Vserver Volume Aggregate State Type Size Available Used%

--------- ------------ ------------ ---------- ---- ---------- ---------- -----

NetUA-01 vol0 aggr0_01 online RW 851.5MB 431.0MB 49%

NetUA-02 vol0 aggr0_02 online RW 851.5MB 435.9MB 48%

2 entries were displayed.

NetUA::>

4. List the disks owned by node NetUA-01. The output shows which disks are part of an aggregate and which are spares.

NetUA::> storage disk show -owner NetUA-01

Usable Container

Disk Size Shelf Bay Type Position Aggregate Owner

---------------- ---------- ----- --- ----------- ---------- --------- --------

NetUA-01:v4.16 1020MB - - spare present - NetUA-01

NetUA-01:v4.17 1020MB - - spare present - NetUA-01

NetUA-01:v4.18 1020MB - - spare present - NetUA-01

NetUA-01:v4.19 1020MB - - spare present - NetUA-01

NetUA-01:v4.20 1020MB - - spare present - NetUA-01

NetUA-01:v4.21 1020MB - - spare present - NetUA-01

NetUA-01:v4.22 1020MB - - spare present - NetUA-01

NetUA-01:v4.24 1020MB - - spare present - NetUA-01

NetUA-01:v4.25 1020MB - - spare present - NetUA-01

NetUA-01:v4.26 1020MB - - spare present - NetUA-01

NetUA-01:v4.27 1020MB - - spare present - NetUA-01

NetUA-01:v4.28 1020MB - - spare present - NetUA-01

NetUA-01:v4.29 1020MB - - spare present - NetUA-01

NetUA-01:v4.32 1020MB - - spare present - NetUA-01

NetUA-01:v5.16 1020MB - - aggregate dparity aggr0_01 NetUA-01

NetUA-01:v5.17 1020MB - - aggregate parity aggr0_01 NetUA-01

NetUA-01:v5.18 1020MB - - aggregate data aggr0_01 NetUA-01

NetUA-01:v5.19 1020MB - - spare present - NetUA-01

NetUA-01:v5.20 1020MB - - spare present - NetUA-01

NetUA-01:v5.21 1020MB - - spare present - NetUA-01

NetUA-01:v5.22 1020MB - - spare present - NetUA-01

NetUA-01:v5.24 1020MB - - spare present - NetUA-01

NetUA-01:v5.25 1020MB - - spare present - NetUA-01

NetUA-01:v5.26 1020MB - - spare present - NetUA-01

NetUA-01:v5.27 1020MB - - spare present - NetUA-01

NetUA-01:v5.28 1020MB - - spare present - NetUA-01

NetUA-01:v5.29 1020MB - - spare present - NetUA-01

NetUA-01:v5.32 1020MB - - spare present - NetUA-01

NetUA-01:v6.16 520.5MB - - spare present - NetUA-01

NetUA-01:v6.17 520.5MB - - spare present - NetUA-01

NetUA-01:v6.18 520.5MB - - spare present - NetUA-01

NetUA-01:v6.19 520.5MB - - spare present - NetUA-01

NetUA-01:v6.20 520.5MB - - spare present - NetUA-01

NetUA-01:v6.21 520.5MB - - spare present - NetUA-01

NetUA-01:v6.22 520.5MB - - spare present - NetUA-01

NetUA-01:v6.24 520.5MB - - spare present - NetUA-01

NetUA-01:v6.25 520.5MB - - spare present - NetUA-01

NetUA-01:v6.26 520.5MB - - spare present - NetUA-01

NetUA-01:v6.27 520.5MB - - spare present - NetUA-01

NetUA-01:v6.28 520.5MB - - spare present - NetUA-01

NetUA-01:v6.29 520.5MB - - spare present - NetUA-01

NetUA-01:v6.32 520.5MB - - spare present - NetUA-01

42 entries were displayed.

NetUA::>
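When the disk list is long, it can help to summarize it before planning a new aggregate. The sketch below parses rows copied from the `storage disk show` output above and counts spares by usable size and lists aggregate members with their RAID position. It assumes the whitespace-separated columns shown in this capture (real output may wrap or align differently), and `parse_disk_rows` and `summarize` are hypothetical helpers, not ONTAP tools.

```python
from collections import Counter

# A few rows copied from the "storage disk show -owner NetUA-01" output above.
SAMPLE = """\
NetUA-01:v4.16 1020MB - - spare present - NetUA-01
NetUA-01:v5.16 1020MB - - aggregate dparity aggr0_01 NetUA-01
NetUA-01:v5.17 1020MB - - aggregate parity aggr0_01 NetUA-01
NetUA-01:v5.18 1020MB - - aggregate data aggr0_01 NetUA-01
NetUA-01:v6.16 520.5MB - - spare present - NetUA-01
"""

FIELDS = ("disk", "size", "shelf", "bay", "container", "position",
          "aggregate", "owner")


def parse_disk_rows(text):
    """Turn disk rows into dicts; header and separator lines are skipped."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        # Disk names look like node:device, which filters out header lines.
        if len(parts) == len(FIELDS) and ":" in parts[0]:
            rows.append(dict(zip(FIELDS, parts)))
    return rows


def summarize(rows):
    """Count spares per usable size and group aggregate members."""
    spares = Counter(r["size"] for r in rows if r["container"] == "spare")
    members = {}
    for r in rows:
        if r["container"] == "aggregate":
            members.setdefault(r["aggregate"], []).append(
                (r["disk"], r["position"]))
    return spares, members


if __name__ == "__main__":
    spares, members = summarize(parse_disk_rows(SAMPLE))
    print(dict(spares))
    print(members)
```

Grouping spares by size is useful here because this node mixes 1020MB and 520.5MB disks, and an aggregate is normally built from disks of matching size.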

5. Look at the configuration of a specific aggregate.

NetUA::> stor aggr show -aggr aggr0_01

(storage aggregate show)

Aggregate: aggr0_01

Checksum Style: block

Number Of Disks: 3

Nodes: NetUA-01

Disks: NetUA-01:v5.16,

NetUA-01:v5.17,

NetUA-01:v5.18

Free Space Reallocation: off

HA Policy: cfo

Space Reserved for Snapshot Copies: -

Hybrid Enabled: false

Available Size: 43.49MB

Checksum Enabled: true

Checksum Status: active

Has Mroot Volume: true

Has Partner Node Mroot Volume: false

Home ID: 4079432749

Home Name: NetUA-01

Total Hybrid Cache Size: 0B

Hybrid: false

Inconsistent: false

Is Aggregate Home: true

Max RAID Size: 16

Flash Pool SSD Tier Maximum RAID Group Size: -

Owner ID: 4079432749

Owner Name: NetUA-01

Used Percentage: 95%

Plexes: /aggr0_01/plex0

RAID Groups: /aggr0_01/plex0/rg0 (block)

RAID Status: raid_dp, normal

RAID Type: raid_dp

Is Root: true

Space Used by Metadata for Volume Efficiency: 0B

Size: 900MB

State: online

Used Size: 856.5MB

Number Of Volumes: 1

Volume Style: flex

NetUA::>

aggr0_01 consists of a single plex (plex0) containing a single RAID group, “/aggr0_01/plex0/rg0”.

Let’s take a closer look at rg0.

NetUA::> system node run -node NetUA-01 aggr status aggr0_01 -r

Aggregate aggr0_01 (online, raid_dp) (block checksums)

Plex /aggr0_01/plex0 (online, normal, active)

RAID group /aggr0_01/plex0/rg0 (normal, block checksums)

RAID Disk Device HA SHELF BAY CHAN Pool Type RPM Used (MB/blks) Phys (MB/blks)

--------- ------ ------------- ---- ---- ---- ----- -------------- --------------

dparity v5.16 v5 ? ? FC:B - FCAL 15000 1020/2089984 1027/2104448

parity v5.17 v5 ? ? FC:B - FCAL 15000 1020/2089984 1027/2104448

data v5.18 v5 ? ? FC:B - FCAL 15000 1020/2089984 1027/2104448

NetUA::>

Aggregate Name = aggr0_01

Node Name = NetUA-01

Now that we have explored an existing aggregate, let’s see how to create a new one.

Creating the New Aggregate:

1. To create an aggregate named “NetUA01_aggr1” on node “NetUA-01” with five FCAL disks, use the following command.

NetUA::> stor aggr create -aggr NetUA01_aggr1 -node NetUA-01 -diskcount 5 -disktype FCAL

(storage aggregate create)

[Job 80] Job succeeded: DONE

NetUA::>

2. Verify the newly created aggregate.

NetUA::> storage aggregate show

Aggregate Size Available Used% State #Vols Nodes RAID Status

--------- -------- --------- ----- ------- ------ ---------------- ------------

NetUA01_aggr1

2.64GB 2.64GB 0% online 0 NetUA-01 raid_dp,

normal

aggr0_01 900MB 39.08MB 96% online 1 NetUA-01 raid_dp,

normal

aggr0_02 900MB 43.54MB 95% online 1 NetUA-02 raid_dp,

normal

3 entries were displayed.

NetUA::>

Adding new disks to Aggregate:

1. Add two disks to the aggregate “NetUA01_aggr1”.

NetUA::> aggr add-disks -aggr NetUA01_aggr1 -disktype FCAL -diskcount 2

2. Verify the new aggregate size.

NetUA::> storage aggregate show

Aggregate Size Available Used% State #Vols Nodes RAID Status

--------- -------- --------- ----- ------- ------ ---------------- ------------

NetUA01_aggr1

4.39GB 4.39GB 0% online 0 NetUA-01 raid_dp,

normal

aggr0_01 900MB 43.51MB 95% online 1 NetUA-01 raid_dp,

normal

aggr0_02 900MB 43.54MB 95% online 1 NetUA-02 raid_dp,

normal

3 entries were displayed.

3. Verify the newly added disks.

NetUA::> aggr show -aggr NetUA01_aggr1

Aggregate: NetUA01_aggr1

Checksum Style: block

Number Of Disks: 7

Nodes: NetUA-01

Disks: NetUA-01:v4.16,

NetUA-01:v5.19,

NetUA-01:v4.17,

NetUA-01:v5.20,

NetUA-01:v4.18,

NetUA-01:v5.21,

NetUA-01:v4.19

Free Space Reallocation: off

HA Policy: sfo

Space Reserved for Snapshot Copies: -

Hybrid Enabled: false

Available Size: 4.39GB

Checksum Enabled: true

Checksum Status: active

Has Mroot Volume: false

Has Partner Node Mroot Volume: false

Home ID: 4079432749

Home Name: NetUA-01

Total Hybrid Cache Size: 0B

Hybrid: false

Inconsistent: false

Is Aggregate Home: true

Max RAID Size: 16

Flash Pool SSD Tier Maximum RAID Group Size: -

Owner ID: 4079432749

Owner Name: NetUA-01

Used Percentage: 0%

Plexes: /NetUA01_aggr1/plex0

RAID Groups: /NetUA01_aggr1/plex0/rg0 (block)

RAID Status: raid_dp, normal

RAID Type: raid_dp

Is Root: false

Space Used by Metadata for Volume Efficiency: 0B

Size: 4.39GB

State: online

Used Size: 180KB

Number Of Volumes: 0

Volume Style: flex

NetUA::>

4. Check the RAID status.

NetUA::> system node run -node NetUA-01 aggr status NetUA01_aggr1 -r

Aggregate NetUA01_aggr1 (online, raid_dp) (block checksums)

Plex /NetUA01_aggr1/plex0 (online, normal, active)

RAID group /NetUA01_aggr1/plex0/rg0 (normal, block checksums)

RAID Disk Device HA SHELF BAY CHAN Pool Type RPM Used (MB/blks) Phys (MB/blks)

--------- ------ ------------- ---- ---- ---- ----- -------------- --------------

dparity v4.16 v4 ? ? FC:B - FCAL 15000 1020/2089984 1027/2104448

parity v5.19 v5 ? ? FC:B - FCAL 15000 1020/2089984 1027/2104448

data v4.17 v4 ? ? FC:B - FCAL 15000 1020/2089984 1027/2104448

data v5.20 v5 ? ? FC:B - FCAL 15000 1020/2089984 1027/2104448

data v4.18 v4 ? ? FC:B - FCAL 15000 1020/2089984 1027/2104448

data v5.21 v5 ? ? FC:B - FCAL 15000 1020/2089984 1027/2104448

data v4.19 v4 ? ? FC:B - FCAL 15000 1020/2089984 1027/2104448

NetUA::>
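The RAID status confirms that both new disks joined rg0 as data disks, so the group went from 3 to 5 data disks while still using one parity and one double-parity disk. The sketch below checks the size change with simple proportional arithmetic, assuming equal-sized disks and that usable capacity scales with the number of data disks; `projected_size` is a hypothetical helper.

```python
def projected_size(current_size, current_data_disks, added_disks):
    """Estimate aggregate size after adding disks to an existing RAID group.

    Assumes all disks are the same size and that the added disks become
    data disks in the existing group (parity disk count unchanged).
    """
    per_data_disk = current_size / current_data_disks
    return per_data_disk * (current_data_disks + added_disks)


# Figures from this example: 2.64 GB with 3 data disks, then 2 disks added.
print(round(projected_size(2.64, 3, 2), 2))  # 4.4
```

The estimate is close to the 4.39GB that ONTAP reports; the small gap is likely due to rounding in the displayed sizes. If the added disks had pushed the group past its maximum RAID size, a new RAID group with its own two parity disks would be needed and this proportional estimate would no longer hold.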

We have just created the aggregate layer. In upcoming articles, we will see how to create Vservers and FlexVol volumes.

Hope this article was informative.
